06/29/2025

What Comes After Revolutionizing the VFX Pipeline

If you haven’t yet, check out our recent video on how our tech artist reshaped the VFX pipeline—link below. This article picks up where the video left off, offering new insights and a peek into what’s next.

🎥 Watch the VFX Pipeline Revolution

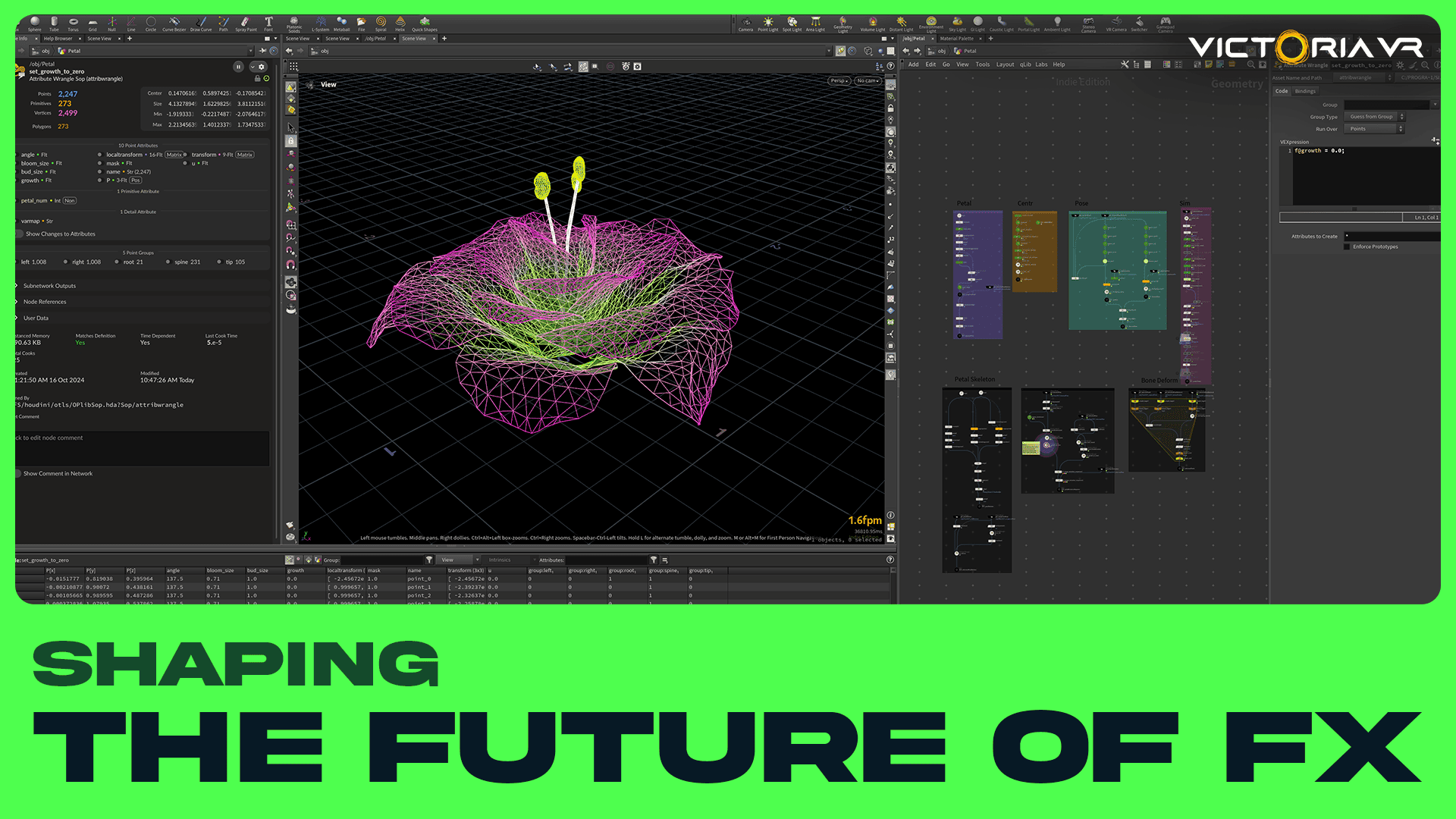

When we shared the story of how Vertex Animated Textures (VATs) brought Houdini simulations into Unreal Engine VR environments without crushing performance, it was only the beginning. Behind every crisp effect and reactive spell lies not just code but intention, iteration, and a very human pursuit of smoother workflows.

We caught up again with Igor, our lead Tech Artist, to explore what happened after the pipelines stabilized… and where things are headed next.

Saving Time, Unlocking Potential

One quiet but crucial win? Solving the Blender-to-Unreal bottleneck. "Animated geometry was often a pain to export cleanly from Blender," Igor explains. "Now, with VATs, we can bring complex characters and prop animations in without the fuss. It saves hours—and sanity."

This integration doesn’t just eliminate errors; it widens the door for creative risks. Character artists can now focus on expression and detail, knowing the pipeline won’t flatten their intent along the way.

Time saved per model: ~3 hours. Across a whole level, that’s days recovered for actual design work.
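For readers new to the technique, the core idea behind a VAT can be sketched in a few lines: each animation frame becomes one row of a texture, each vertex one column, and each pixel stores that vertex's offset from the rest pose, remapped to the 0..1 range. The Python below is only an illustration with plain lists, not the studio's exporter or Houdini's actual VAT tooling; the names `encode_vat` and `decode_vat` and the single shared min/max range are assumptions made for this example.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def encode_vat(rest: List[Vec3], frames: List[List[Vec3]]):
    """Pack per-frame vertex offsets into rows of normalized RGB 'pixels'.

    Returns (texture, lo, hi): texture[frame][vertex] is an (r, g, b) triple
    in [0, 1]; lo and hi are the offset bounds needed to decode it again.
    """
    # Offset of every vertex from its rest position, per frame.
    offsets = [
        [tuple(p[i] - r[i] for i in range(3)) for p, r in zip(frame, rest)]
        for frame in frames
    ]
    flat = [c for row in offsets for off in row for c in off]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # a static mesh has no range; avoid dividing by zero
    texture = [
        [tuple((c - lo) / span for c in off) for off in row]
        for row in offsets
    ]
    return texture, lo, hi


def decode_vat(texture, lo: float, hi: float, rest: List[Vec3],
               frame: int, vertex: int) -> Vec3:
    """Roughly what a vertex shader does: sample a pixel, rebuild the position."""
    span = (hi - lo) or 1.0
    pixel = texture[frame][vertex]
    return tuple(rest[vertex][i] + pixel[i] * span + lo for i in range(3))
```

Because the animation lives in a texture, the engine only has to sample one pixel per vertex per frame instead of evaluating a simulation or a skeleton, which is the general reason the approach is cheap at runtime.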

Copernicus: A System Built for Change

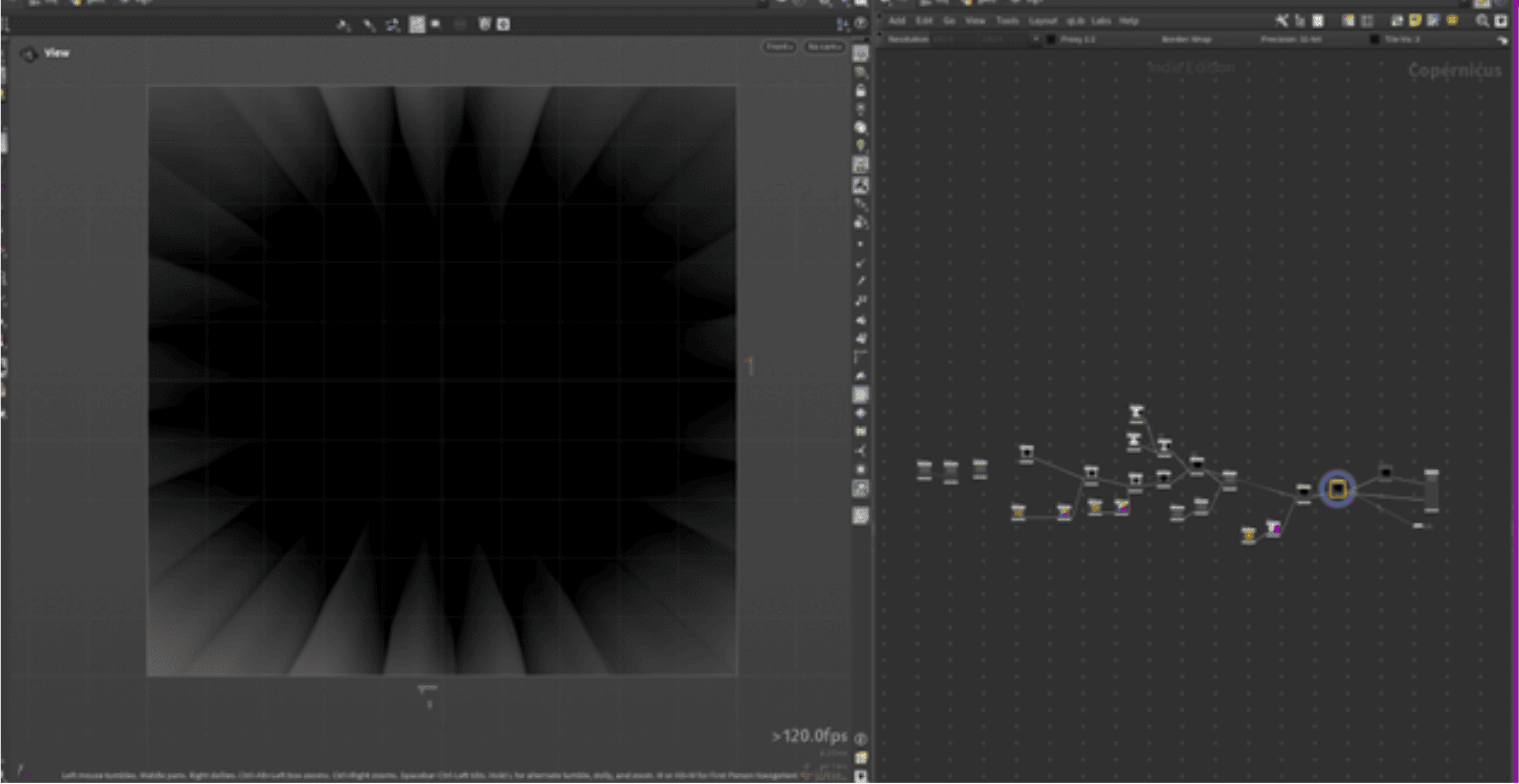

While VATs steal the spotlight, another new tool quietly reshaped the creative workflow: Copernicus, Houdini’s next-gen image processing framework.

This procedural toolkit empowers artists to quickly generate effects assets that automatically reflect concept art changes. "Concept evolves constantly," says Igor. "With Copernicus, decals and sprites evolve with it—instead of becoming outdated overnight."

This means fewer manual updates and a library of assets that feels alive, not static. For a dynamic game world, that’s a major win.

Procedural Furniture, Real Efficiency

Another behind-the-scenes breakthrough came from building procedural furniture systems for the game’s builder toolset.

Instead of manually modeling endless variants, Igor designed systems that let artists generate unique versions from a single base mesh—with sliders for size, material, and ornamentation.

One asset becomes ten. That’s huge when you’re dressing an entire city.
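The "one asset becomes ten" idea can be illustrated with a small parameter generator. This is a hedged sketch, not Igor's system: the `FurnitureVariant` fields mirror the three sliders mentioned above (size, material, ornamentation), while the function name, value ranges, and material names are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class FurnitureVariant:
    base_mesh: str       # the single source mesh every variant derives from
    scale: float         # "size" slider
    material: str        # "material" slider
    ornamentation: int   # "ornamentation" slider: 0 = plain, higher = more detail


def generate_variants(
    base_mesh: str,
    count: int,
    materials: Sequence[str],
    seed: int = 0,
    scale_range: Tuple[float, float] = (0.8, 1.2),
    max_ornamentation: int = 3,
) -> List[FurnitureVariant]:
    """Return `count` distinct slider combinations for one base mesh."""
    rng = random.Random(seed)  # seeded so the same inputs give the same set
    seen = set()
    variants: List[FurnitureVariant] = []
    for _ in range(count * 100):  # bounded retries instead of looping forever
        if len(variants) == count:
            break
        candidate = FurnitureVariant(
            base_mesh,
            round(rng.uniform(*scale_range), 2),
            rng.choice(materials),
            rng.randint(0, max_ornamentation),
        )
        if candidate not in seen:
            seen.add(candidate)
            variants.append(candidate)
    if len(variants) < count:
        raise ValueError("not enough distinct combinations for the requested count")
    return variants
```

Calling `generate_variants("chair_base", 10, ["oak", "walnut", "iron"])` yields ten distinct parameter sets; in a real pipeline each set would drive the procedural mesh rather than just describe it.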

It’s the kind of work that doesn’t always get applause, but defines how scalable your world can be.

What’s Next? Near-Future Innovations in Tech Art

If the last year was about creating seamless pipelines, the next one is about real-time intelligence.

Here are three areas Igor—and the rest of the studio—are exploring right now:

1. Smarter VAT Systems

While VATs already allow for baked simulations in VR, future iterations may:

- Adapt in real time to gameplay variables (e.g., dynamically alter fluid flow based on wind or damage).

- Use ML-generated simulations to drastically speed up Houdini baking times.

2. Decentralized Asset Libraries

Using metadata tagging and procedural generation, Copernicus-type systems may evolve into fully self-organizing asset libraries. Imagine a sprite that knows when to update itself—or a texture that remaps based on biome.
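As a rough illustration of "a texture that remaps based on biome", a tag-driven lookup might look like the sketch below. Everything here is hypothetical (the `TaggedLibrary` class, the tag names, and the fallback rule); it only shows how metadata tags could let the library, rather than the artist, pick the right asset.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Asset:
    name: str
    tags: FrozenSet[str]


class TaggedLibrary:
    """A tiny asset library that is queried by metadata tags, not by file name."""

    def __init__(self) -> None:
        self._assets: List[Asset] = []

    def add(self, name: str, *tags: str) -> None:
        self._assets.append(Asset(name, frozenset(tags)))

    def find(self, *required: str) -> List[str]:
        """Names of all assets carrying every required tag, in insertion order."""
        wanted = set(required)
        return [a.name for a in self._assets if wanted <= a.tags]

    def resolve(self, kind: str, biome: str, fallback: str = "default") -> Optional[str]:
        """Pick the asset of `kind` for `biome`, else the one tagged `fallback`."""
        matches = self.find(kind, biome) or self.find(kind, fallback)
        return matches[0] if matches else None
```

With `rock_snow` tagged `("rock", "tundra")` and `rock_plain` tagged `("rock", "default")`, `resolve("rock", "tundra")` returns `rock_snow`, while an unknown biome falls back to `rock_plain`.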

3. Niagara AI Behavior Linkage

There’s growing interest in linking Niagara particle systems to AI logic, so that effects not only look reactive but also behave with purpose. For example, fire could spread differently depending on AI group tactics.

And Further Down the Road?

Distant-future dreams might sound wild, but many are already entering prototype stages:

- Bi-directional DCC bridges: Not just Houdini ➝ Unreal, but Unreal ➝ Houdini with full state awareness. Artists could "pull" game world data into Houdini for high-level simulation tweaks.

- Real-time VFX prototyping in VR: Building and testing effects inside the headset, bypassing monitor iterations altogether.

- Generative asset design assistants: Think of ChatGPT for materials. Describe a smoke trail and get a baseline VFX system with sliders to refine.

"True multi-threading, smarter algorithms, and better DCC connections: those are the pillars of what’s coming." – Igor, Lead Tech Artist at Victoria VR

The Magic Still Matters

Igor’s proudest moment this year? The Root Trap spell. A fully controllable Niagara system, built from a Houdini procedural core and enhanced by shader manipulation.

But what makes it special isn’t just how it works. It’s that the pipeline allowed it to exist. Without those time-saving back-end solutions, the effect might have been scaled down or scrapped.

A Studio That Stays Flexible

In the end, what sets our studio apart isn’t one tool or pipeline—it’s a mindset.

"Whenever something changes, we adapt. That flexibility is rare," says Igor. And it’s clear this mindset fuels more than just survival—it fuels evolution.

As real-time graphics grow more complex and development cycles tighten, this hybrid of creativity and efficiency isn’t just impressive—it’s essential.

Want to see the full picture? Watch the original video now